The integrity and reliability of research hinge on the trustworthiness of its sources.

As Generative AI grows in popularity and continues to evolve, examining the interplay between this technology and research publications underscores the essential role citations play in establishing the credibility of large language models (LLMs). Accurate citations help ensure that advances are grounded in verified knowledge, fostering innovation and preventing the spread of erroneous information.

The key question, then, is: how can we ensure this accuracy?

Below, we outline how advances in research technology, including our Smart Citations and Scite Assistant, offer quality control, address trust-related concerns, and bring order to LLMs.

The Technology Trust Gap

Trained on huge corpora of text, Generative AI applications such as ChatGPT can produce impressive, coherent text. The models behind these applications form the backbone of recent advances in natural language processing (NLP), transforming the way we access and interact with information.

Researchers who have used AI tools over the last year or so have come to appreciate their efficiency and power across a variety of tasks in the research and innovation workflow. At the same time, they have begun to recognize the challenges these technologies pose, particularly around transparency and trust.

These challenges, though daunting, are not unfamiliar: search and discovery technologies have faced similar issues before. Alongside the new obstacles, we are running into difficulties that have accompanied every new technology and every new way of accessing information.

New Tech, Old Problems

Citations have proved pivotal at many stages in the development of new technologies and new ways of communicating and publishing information. They have been critical to organizing information on the Web and in Wikipedia, and now in LLMs.

Flash back to the nineties.

In comes powerful new technology in the form of web browsers and search engines, changing the world much as ChatGPT is changing it today. More and more people have personal computers and can suddenly interact with massive amounts of information. You can look up anything, on any topic, produced by anyone. From AltaVista to Aliweb to Ask Jeeves, these search engines were how we got answers from all the new sources of information on the World Wide Web.

And, of course, the same challenges existed then.

How do we know what to trust? Is Jeeves going to give us the best article? Is it the most relevant? Was it produced by some unknown stranger, or is it from The New York Times?

These issues, rooted in information overload and poor organization, are very reminiscent of where we are today with LLMs and ChatGPT, and we are still working out the best way to get this right.

Trusting Wikipedia: A Crowdsourced Encyclopedia

Wikipedia is another example of a powerful new technology, and a new way of looking at information, that we’ve had to contend with. We may take it for granted now, but it’s actually pretty remarkable.

It is a crowdsourced encyclopedia, written by strangers across the world, on almost any topic. And yet, in many cases, it is reasonably trustworthy.

So how are we able to rely on people whose qualifications and credentials are unknown to publish “sourceable” content on the web? How has Wikipedia managed to exist this way and still remain trustworthy for the most part?

This question brings us to another: how can we trust ChatGPT?

Citations As AI Trust Markers in Research

ChatGPT is exceedingly powerful and easy to use for a plethora of tasks. We’re using it for coding, writing, and information retrieval.

But how can we be confident that any of this is accurate?

Given what we know about the web pages of the internet’s early days, how can we trust the sources LLMs draw from? And, more generally, how can we trust the outputs LLMs produce?

This is where we look for trust markers.

Citation Ranking: Bringing Order to the Web

Here is an excerpt from the 1998 PageRank paper, “The PageRank Citation Ranking: Bringing Order to the Web”:

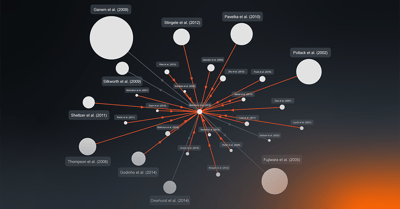

Written by Google’s founders, Larry Page and Sergey Brin, together with colleagues, the paper laid the foundations for organizing the web. Inspired by academic citations, the authors analyzed how practices from research publishing could better inform how web pages are ranked.

The paper was a milestone in moving from a massive amount of chaotic web information to some semblance of order.

Simplified PageRank Calculation

With PageRank, as the excerpt above shows, a page’s rank depends on the pages that link to (cite) it, and a link from a higher-ranking page lends more credibility than a link from a lower-ranking one. This provides an initial marker of trustworthiness. Ranking has since grown far more sophisticated, but that initial adaptation of research-paper citations to web pages is what really brought quality control into the spotlight.
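The 1998 paper defines rank recursively: a page’s score is the sum of the scores of the pages that cite it, each divided by the number of outbound links on the citing page. The minimal Python sketch below illustrates that idea with power iteration. It is not Google’s implementation; the damping factor of 0.85, the fixed iteration count, spreading the score of pages that cite nothing evenly across all pages, and the toy link graph are all illustrative assumptions, and the function and page names are hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank computed by power iteration (illustrative sketch).

    links maps each page to the list of pages it links to ("cites").
    Each page splits its current score evenly among the pages it cites,
    so a citation from a high-scoring page is worth more than one from
    a low-scoring page.
    """
    # Collect every page, including pages that only appear as link targets.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution

    for _ in range(iterations):
        # Assumption: every page receives a small baseline share (the "random jump").
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Pass this page's rank, split evenly, to each page it cites.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page that cites nothing spreads its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical toy web: A cites B and C, B cites C, C cites A, D cites C.
    links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
    for page, score in sorted(pagerank(links).items(), key=lambda kv: -kv[1]):
        print(page, round(score, 3))
```

In this toy graph, C is cited by three pages and ranks highest. A is cited only once, but because that single citation comes from C, A outranks B, which receives just half of A’s vote. D, which nobody cites, keeps only the baseline score, mirroring the idea that a citation’s weight depends on who is doing the citing.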

Look at Wikipedia again and you can see the same key theme of citations. When you contribute content, you write a sentence that makes a claim; if that claim needs backing up and has no source, it gets tagged “[citation needed].”

Wikipedia works because contributors add citations to claims so that editors and readers can fact-check them, thereby building trust.

Citations in Large Language Models

Just as Wikipedia would be hard to trust without citations, the same is true of ChatGPT and other LLM applications. We need to be able to verify claims, click through to sources, and dig deeper into the articles to judge their credibility, relevance, and impact.

It is therefore worth considering how different LLM chatbots implement citations.

Let’s take a look at Gemini.

The prompt: “What are the causes of misinformation?”

If you ask Gemini this, you’ll see small icons within the answer; clicking one opens the corresponding citation.

In this case, the answer cites a research article hosted on NCBI, which builds trust in the response.

Now, let’s look at Copilot.

Using the same prompt, we get a different style of output: the citations appear along the bottom, much like footnotes in some academic papers.

Finally, let’s look at Perplexity. This time, a footnote-style citation appears next to each statement in the answer.

These examples all demonstrate the crucial role of citations when evaluating generative outputs from LLMs. Citations enhance trustworthiness by allowing users to verify information so they can be more confident in the reliability and integrity of the outputs.

Raising Standards in Research

The idea of Scite was born out of a paper.

In 2012, a widely discussed report co-authored by a former Amgen executive revealed that, over the previous decade, Amgen scientists had tried to validate 53 major cancer studies in-house and could not replicate most of them. The problem came to be known as the “reproducibility crisis.” Bayer reported similar findings, observing reproducibility problems not only in cancer studies but also across various other indications.

This “reproducibility crisis” is also a crisis of trust in research. There are citations, but are they enough?

ChatGPT can produce bibliographies that list real journals, complete with real-looking DOIs and authors who are often real people, yet the articles themselves don’t exist. We’ve seen more and more students ask librarians for articles that don’t exist, which means people are relying on information that has been hallucinated or is otherwise untrustworthy.

In research, relying on this information could affect social policy or even clinical decisions.

This is why we developed the next generation of citations, Smart Citations, and applied them to our tool Scite Assistant, giving researchers richer context around scholarly content and a sounder basis for trusting LLMs, specifically ChatGPT.

Scite Assistant combines the flexibility and ease of use of LLMs with the trustworthiness of peer-reviewed articles and next-generation Smart Citations. Answers to prompts draw directly on research articles, and users can see how those articles have been cited. It’s quick and easy to compare citation statements and make better use of the world’s knowledge, whether you’re a student, an industry professional, or simply pursuing a personal interest.

Large Language Model Experience for Researchers

The north star of Scite Assistant is to build out control and trust. Our tool combines the power of large language models with our unique database of Smart Citations to minimize the risk of hallucinations and improve information quality with real references.

We validate ChatGPT's output against research articles and scholarly literature to ensure accuracy and reliability. Assistant also provides valuable insights into the application of citations and quality control, fostering trust in AI. Use Assistant to develop search strategies, build reference lists for new topics, write compelling marketing and blog posts, and much more. With observability at its core, Assistant helps you make more informed decisions about AI-generated content.

Try it today and elevate your work with confidence! You can also register to watch our ChatGPT for Science webinar series, available on demand. Dive into further discussions about navigating quality control in ChatGPT and explore the technical aspects behind the scenes of Scite Assistant.